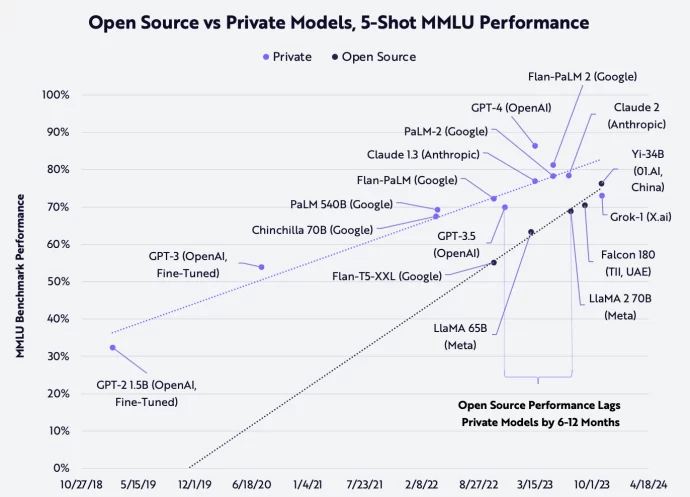

While the performance of GPT-4 Turbo and other closed AI models is improving, open-source models are gaining ground quickly. Recently, China’s 01.AI released an open-source model, Yi-34B, that outperformed its open-source peers on several benchmarks. On MMLU, a benchmark measuring basic knowledge across 57 subjects, for example, Yi-34B scored 76.3%, surpassing the 68.9% and 70.4% of other open-source models like Meta Platforms’ LLaMA2-70B and the TII’s Falcon-180B, respectively. Transparent and free, open-source models seem to be benefiting from the contributions of diverse developer groups that are accessing and contributing to the leading foundation models.

https://ark-invest.com/newsletter_item/1-openais-improved-chatgpt-should-delight-both-expert-and-novice-developers

Jaron Lanier Looks into AI’s Future

The math of open source does not work the way people think, because it ends up producing hyper-centralization rather than decentralization. https://www.bloomberg.com/news/videos/2023-11-16/jaron-lanier-looks-into-ai-s-future

Running Open-Source AI Models on Your Computer

How to Run Open Source Language Models On Your Computer

We can use ollama.ai to run open-source large language models locally. I can’t promise that the output will be up to ChatGPT standards, but in a pinch, it will do. You can run the latest Mistral, Mixtral, Llama and Code Llama models locally, without an H100 cluster. https://www.youtube.com/watch?v=Kc-q6xNTBUg
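As a rough illustration of what "running locally" looks like from code, the sketch below sends a prompt to a local Ollama server over its HTTP API using only the Python standard library. It assumes Ollama is already installed and serving on its default port (11434) and that a model named `mistral` has been pulled; the helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    # Local models can be slow on modest hardware, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the full completion arrives in the "response" field.
    return body["response"]
```

With the server running, something like `print(generate("mistral", "Explain quantization in one sentence."))` should print the model's answer; the model name is only an example and must match one you have pulled locally.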

Open-source LLMs – Graph of open-source vs. private models – Llama 3 as the reference among open-source AI models – Meta went open source and acts as a counterweight to the closed models – What is happening with Mistral – Having LeCun on board is a guarantee of success – Hugging Face: 350K open-source models – Quantization techniques – Mixture of experts as key in open source: use pieces of the model, not the whole model – NASA, Meta, IBM, Apple!!! in open-source models

From OpenAI to Open Source in 5 Minutes Tutorial (LM Studio + Python)

How to switch from the OpenAI API to an open-source model in 5 minutes. We test LM Studio's local server to download and use the Dolphin Mistral 7B open-source LLM in Python.
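The switch is quick because LM Studio's local server speaks an OpenAI-compatible chat completions API, so in principle only the base URL (and the model name) has to change. The sketch below shows that idea with the standard library alone. It assumes the server is running on LM Studio's default address (`http://localhost:1234/v1`); the helper names and the `api_key` placeholder are illustrative, and the model identifier you pass should be whatever LM Studio displays for the model you loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
LOCAL_BASE_URL = "http://localhost:1234/v1"
OPENAI_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: str = "not-needed") -> urllib.request.Request:
    """Build a chat completions request; only base_url differs between providers."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # A local server typically ignores the key; the hosted API needs a real one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(base_url: str, model: str, user_message: str, api_key: str = "not-needed") -> str:
    """Send one user message and return the assistant's reply text."""
    request = build_chat_request(base_url, model, user_message, api_key)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

Calling `chat(LOCAL_BASE_URL, "<model id shown in LM Studio>", "Hello")` targets the local model, while passing `OPENAI_BASE_URL` with a real key targets the hosted API. If you use the official `openai` Python client instead, the equivalent change is passing `base_url` when constructing the client.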

Comments by Luis G de la Fuente